Climate Change, Statistical Significance, and Science

A recent plea for scientists to “stop playing dumb on climate change” shows why The New York Times Op Ed page needs a statistician.

Recently, the New York Times published an opinion piece, “Playing Dumb on Climate Change,” by Naomi Oreskes, a professor of the history of science at Harvard University, which argues that in the case of climate change, scientists are too conservative in their scientific standards. Scientists adhere to standards that call for 95 percent confidence levels, meaning that if all climate behavior were random fluctuation, there would be less than a 5 percent chance that temperatures would be as warm as or warmer than they are. In Oreskes’ opinion, scientists ought to use a standard less stringent than 95 percent because there is a plausible causal mechanism for climate change and because the risk from inaction is so great. Unfortunately, to make her argument, the author confuses several different aspects of confidence, evidence, belief, and decision-making.

The purpose here is to point out the confusion and clarify the statistical issues. This article is not about climate change; it’s about statistics. Oreskes’ mistaken interpretation of these statistical ideas does not imply that climate change is in question; the evidence for climate change consists of mechanistic as well as statistical arguments, and has little to do with the topic under discussion here: a misinterpretation of what is called the p-value, a statistic that tells us the probability of seeing high temperatures if they were occurring just by chance. The American Statistical Association already has a statement on climate change, and nothing in this article is intended to contradict the ASA’s statement or to deny the reality of anthropogenic climate change. This article is only about the proper use and interpretation of statistics.

The piece begins by noting that “Science is conservative, and new claims of knowledge are greeted with high degrees of skepticism. When Copernicus said the Earth orbited the sun, when Wegener said the continents drifted, and when Darwin said species evolved by natural selection, the burden of proof was on them to show that it was so. In the 18th and 19th centuries, this conservatism generally took the form of a demand for a large amount of evidence; in the 20th century, it took on the form of a demand for statistical significance.” So far, so good: Many scientists and statisticians demand statistical significance at 5 percent. But it is important to note that “significance” and “confidence,” as used below, are technical terms in statistics and should not be interpreted according to their usual colloquial meaning.

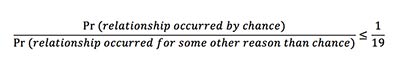

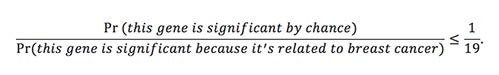

“Typically, scientists apply a 95 percent confidence limit, meaning that they will accept a causal claim only if they can show that the odds of the relationship’s occurring by chance are no more than one in 20,” writes Oreskes, which, in light of the full article, suggests she thinks that “odds of the relationship’s occurring by chance are no more than one in 20” means, in technical notation, that:

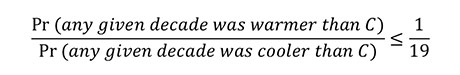

where we note that a chance of 1/20 is the same as odds of 1/19 (the odds of an event are given by the chance of the event, divided by the chance the event does not occur). But this interpretation of 95 percent confidence is not correct. To understand why, suppose data show that the last decade was warmer than previous decades. Using statistical theory and climatological data and models, we can determine how much year-to-year variation there is in temperature and find the upper 5 percent of that variation; that is, we can find a cut-off temperature C such that about 5 percent of all decades are warmer and about 95 percent are cooler. We only consider decades in recent history, recent enough that the climate was roughly what it would be today without climate change. By choosing the critical temperature C this way, there is only about one decade warmer than C for every 19 decades cooler than C, or about one in 20. To put it in mathematical language, let “Pr” denote “probability,” and then using this critical temperature, the odds that a decade is warmer than the temperature C are:

The probabilities are calculated under climatological and statistical models for recent climate assuming there is no climate change. Finally, if the most recent decade’s temperature is C or more, we call it significant at the 5 percent level, because the chance that the temperature would be that high assuming only random fluctuation is at most 5 percent.

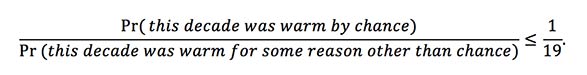
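As a rough sketch of the construction just described, the Python below converts a chance into odds and then finds a cut-off C from simulated decades. The normal model for decade temperatures, its spread of 0.2, and the names `odds_from_chance` and `null_decades` are assumptions made only for illustration; real climatological models are far richer.

```python
from fractions import Fraction
import random
import statistics


def odds_from_chance(p: Fraction) -> Fraction:
    """Odds of an event: the chance it occurs divided by the chance it does not."""
    return p / (1 - p)


# A chance of 1/20 corresponds to odds of 1/19.
chance = Fraction(1, 20)
odds = odds_from_chance(chance)
print(f"chance = {chance}, odds = {odds}")

# Hypothetical "no climate change" model (an assumption, not the authors' model):
# decade temperature anomalies are independent normal draws.
rng = random.Random(0)
null_decades = [rng.gauss(0.0, 0.2) for _ in range(20_000)]

# Critical temperature C: the cut-off exceeded by about 5 percent of such decades.
# quantiles(..., n=20) returns 19 cut points; the last one is the 95th percentile.
C = statistics.quantiles(null_decades, n=20)[-1]
share_warmer = sum(t > C for t in null_decades) / len(null_decades)
print(f"C = {C:.3f}, share of chance-only decades warmer than C = {share_warmer:.3f}")
```

A decade at or above `C` would be called significant at the 5 percent level, which is a statement about how often chance alone produces such a decade and nothing more.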

Importantly, significance at the 5 percent level does not mean:

It’s important to note that calculating how likely the data would be if they were random does not determine the reasons behind the data that we see, even though it may contribute evidence, in the bigger picture, about the reasons behind climate change.

Also, when Oreskes says scientists treat the “95 percent confidence limit” as a “causal claim,” she is wrong. The confidence level (and the confidence interval) speaks to the probability that we would see temperatures this warm or warmer if they were simply random fluctuations. No causes can be inferred from temperature data alone, however the chips fall. It may be unlikely for the data to occur if they were all random fluctuations, but the reasons that the data did occur cannot be deduced from this simple observation.

To continue the illustration, let’s examine other decades. Imagine we had data from 1000 BC to 0 BC. That’s 100 decades, none of which had global warming caused by humans; nevertheless, we know just by chance that about 5 percent will have had temperatures above the critical threshold C. Those decades would be labeled significant by the 95 percent rule even though we would make no causal claim and even though we know they occurred by chance. (Often when we examine many things such as the 100 decades here, we use a stricter standard than 95 percent for declaring significance. We’re ignoring the stricter standard here.)
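The arithmetic behind the 100-decade illustration can be sketched in a few lines of Python. The sketch assumes the decades are independent of one another, which is a simplification, and it uses the Bonferroni correction as one example of a stricter standard; the text above does not say which stricter standard it has in mind.

```python
ALPHA = 0.05       # the usual 5 percent significance level
N_DECADES = 100    # 1000 years of decades, none with human-caused warming

# Expected number of chance-only decades labeled significant.
expected_flagged = ALPHA * N_DECADES

# Chance that at least one decade is labeled significant
# (assumes independent decades, an illustrative simplification).
p_at_least_one = 1 - (1 - ALPHA) ** N_DECADES

# One possible stricter standard (Bonferroni): test each decade at ALPHA / N_DECADES.
bonferroni_alpha = ALPHA / N_DECADES
p_any_bonferroni = 1 - (1 - bonferroni_alpha) ** N_DECADES

print(f"expected significant decades: {expected_flagged:.1f}")
print(f"chance of at least one:       {p_at_least_one:.3f}")
print(f"same, with Bonferroni:        {p_any_bonferroni:.3f}")
```

Under these assumptions a "significant" decade somewhere in the record is nearly guaranteed at the 5 percent level, while the stricter per-decade level keeps the chance of any false alarm just under 5 percent.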

For another example, consider the question of how many genes are associated with breast cancer. We have samples of people with and without breast cancer and, for each variant of each gene, we check whether its prevalence is different, or about the same, in the two groups of people. (We’re oversimplifying again, for the purpose of illustration.) Let’s use 10,000 as the number of genes for our calculation even though there are many more. Just by chance we would find that about 5 percent of the 10,000 genes, or about 500, would be labeled significant because they have variants that randomly occur more frequently among the breast cancer patients in our sample. And if there were a small number of genes, say three, that really did affect the probability of developing breast cancer, they would likely be labeled significant too, so there would be a total of about 503 genes labeled significant. Yet about 500 of them would be spurious and three would not. Based on the statistics, they would all be labeled significant together; we wouldn’t know how many or which ones are spurious and how many or which ones are not.
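A small simulation can make the gene-screening example concrete. It is only a sketch under stated assumptions: each unrelated gene gets a p-value drawn uniformly between 0 and 1 (which is how p-values behave when nothing but chance is at work), and the three real genes are given a tiny hypothetical p-value so that they are always detected.

```python
import random

ALPHA = 0.05
N_NULL = 10_000   # genes with no real association
N_REAL = 3        # genes that truly affect risk

rng = random.Random(42)

# Under chance alone, a p-value is uniformly distributed on [0, 1].
null_p_values = [rng.random() for _ in range(N_NULL)]

# Assumption for illustration: real effects are strong enough to give tiny p-values.
real_p_values = [1e-6] * N_REAL

null_hits = sum(p < ALPHA for p in null_p_values)
real_hits = sum(p < ALPHA for p in real_p_values)
total_hits = null_hits + real_hits

print(f"spurious genes labeled significant: {null_hits}")
print(f"real genes labeled significant:     {real_hits}")
print(f"total labeled significant:          {total_hits}")
```

The count of spurious hits lands near 500 from run to run, and nothing in the list of significant genes distinguishes those from the three real ones.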

So the words “odds of occurring by chance,” ought to refer to the fact that only about 500 of the 10,000 genes not involved in breast cancer are found to be significant or to the fact that about 5 percent of all decades are significantly warmer than usual. But is that what Oreskes meant? Her piece continues, “if there’s more than even a scant 5 percent possibility that an event occurred by chance, scientists will reject the causal claim. It’s like not gambling in Las Vegas even though you had a nearly 95 percent chance of winning.” “Winning” sounds like identifying a gene that’s implicated in breast cancer or like offering a convincing demonstration that humans are changing the climate.

In this hypothetical case of breast cancer genes, a gene identified as significant still has a very small chance of being causally related to breast cancer: only 3 of the 503 identified genes are. The odds that a gene identified as significant is actually related to cancer are 3/500. Oreskes’ phrasing suggests she believes scientists are upholding a rule that declares significance only when the chance of such a gene being implicated in cancer is very high, over 95 percent. In other words, she believes that statistical significance occurs when the gene in question is unlikely to be there for reasons of chance alone. That is, Pr(this gene is significant by chance) < 0.05, or in terms of odds, it sounds like Oreskes thinks the 5 percent means:

Or, to bring it back to climate change:

As we explained above, that’s not what 5 percent significance and 95 percent confidence mean. It seems the BBC made a similar mistake a few years ago, when it attempted to describe research results declaring global warming statistically significant, stating, “scientists use a minimum threshold of 95% to assess whether a trend is likely to be down to an underlying cause, rather than emerging by chance.” Well, no: the minimum threshold of 95 percent reflects a judgment of how likely the data are to occur if we assume they emerged by chance (less than 5 percent likely, if the 95 percent threshold is met).

In the breast cancer example the contribution of statistics was to reduce from about 10,000 to about 500 the number of genes to pursue in future investigations. That’s a real win, a real scientific advance, and one we were very sure of being able to accomplish.
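The two quantities being confused in the breast cancer example can be computed exactly from the article's own round numbers. The variable names below are illustrative, and the sketch assumes, as the example does, that all three real genes are detected.

```python
from fractions import Fraction

null_genes = 10_000                          # genes unrelated to breast cancer
real_genes = 3                               # genes that truly matter
false_hits = Fraction(5, 100) * null_genes   # about 500 significant by chance
true_hits = Fraction(real_genes)             # assumes all three are detected

# What 5 percent significance controls: Pr(labeled significant | gene is unrelated).
pr_sig_given_unrelated = false_hits / null_genes

# What the op-ed's phrasing suggests: Pr(gene is related | labeled significant).
pr_related_given_sig = true_hits / (true_hits + false_hits)
odds_related_given_sig = true_hits / false_hits

print(f"Pr(significant | unrelated) = {pr_sig_given_unrelated}")
print(f"Pr(related | significant)   = {pr_related_given_sig} (about {float(pr_related_given_sig):.3f})")
print(f"odds(related | significant) = {odds_related_given_sig}")
```

The first probability is 1/20 by construction; the second is 3/503, well under 1 percent, even though every one of the 503 genes passed the 95 percent standard.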

The piece continues, “there have been enormous arguments among statisticians about what a 95 percent confidence level really means.” We disagree; we think almost every statistician would accept our explanation of what a 95 percent confidence level means. But we do agree that many working scientists, the BBC, and Oreskes, a historian, get it wrong.

“But the 95 percent level has no actual basis in nature. It is a convention, a value judgment. The value it reflects is one that says that the worst mistake a scientist can make is to think an effect is real when it is not. This is the familiar “Type 1 error.” You can think of it as being gullible, fooling yourself, or having undue faith in your own ideas. To avoid it, scientists place the burden of proof on the person making an affirmative claim. But this means that science is prone to “Type 2 errors”: being too conservative and missing causes and effects that are really there.”

A Type I error would be labeling “significant” a gene that is truly unrelated to breast cancer. A Type II error would be failing to label a gene that is truly related to breast cancer. As Oreskes notes, we can decrease the number of Type I errors by using a stricter standard for labeling. But that would increase the number of Type II errors. Or we can decrease the number of Type II errors by adopting a looser standard, but that would increase the Type I errors. There is no one right way to balance them, as the piece acknowledges next.
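The trade-off between the two error types can be sketched numerically. The setup is hypothetical: a test statistic that is standard normal when only chance is at work and is shifted by 2 units when the effect is real. Both the shift and the function name `error_rates` are assumptions chosen for illustration.

```python
from statistics import NormalDist

chance_only = NormalDist(mu=0.0, sigma=1.0)   # statistic when nothing is going on
real_effect = NormalDist(mu=2.0, sigma=1.0)   # hypothetical size of a real effect


def error_rates(alpha: float) -> tuple[float, float]:
    """Return (Type I rate, Type II rate) for a one-sided test at level alpha."""
    cutoff = chance_only.inv_cdf(1 - alpha)   # label "significant" above this value
    type_1 = 1 - chance_only.cdf(cutoff)      # chance-only data labeled significant
    type_2 = real_effect.cdf(cutoff)          # real effect not labeled significant
    return type_1, type_2


for alpha in (0.01, 0.05, 0.10):
    t1, t2 = error_rates(alpha)
    print(f"level {alpha:.2f}: Type I rate = {t1:.3f}, Type II rate = {t2:.3f}")
```

Loosening the standard from 0.01 to 0.10 lowers the Type II rate and raises the Type I rate by construction; the numbers themselves depend entirely on the assumed effect size.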

“Is a Type 1 error worse than a Type 2? It depends on your point of view, and on the risks inherent in getting the answer wrong.” But there are no risks associated with either Type I or Type II errors. Risks arise only when we contemplate actions. Type I and Type II errors do not prescribe actions; they describe whether data are consistent with chance mechanisms. Type I errors occur when data generated by chance appear to be inconsistent with chance. Type II errors occur when data not generated by chance (at least not by a null or uninteresting chance mechanism) appear to be consistent with chance.

“The fear of the Type 1 error asks us to play dumb; in effect, to start from scratch and act as if we know nothing. That makes sense when we really don’t know what’s going on, as in the early stages of a scientific investigation. It also makes sense in a court of law, where we presume innocence to protect ourselves from government tyranny and overzealous prosecutors —but there are no doubt prosecutors who would argue for a lower standard to protect society from crime. When applied to evaluating environmental hazards, the fear of gullibility can lead us to understate threats. It places the burden of proof on the victim rather than, for example, on the manufacturer of a harmful product. The consequence is that we may fail to protect people who are really getting hurt.”

Statisticians and scientists know that declarations of significance are different from actions. That’s why we have decision theory. We minimize expected loss and maximize expected utility. We don’t develop therapies that target all the genes found to be significant. We investigate further and we consider probabilities, risks, and utilities in addition to declarations of significance.
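A toy decision-theory calculation shows how an action threshold comes from losses rather than from a significance level. Everything here is hypothetical: the two actions, the loss values (in arbitrary units), and the names `LOSSES`, `expected_loss`, and `best_action` are invented for the sketch.

```python
# Hypothetical losses in arbitrary units, indexed by action and by the true state.
LOSSES = {
    "act":  {"real": 1.0,  "not_real": 1.0},   # acting costs the same either way
    "wait": {"real": 10.0, "not_real": 0.0},   # waiting is costly only if the effect is real
}


def expected_loss(action: str, p_real: float) -> float:
    """Average loss of an action, weighted by our belief that the effect is real."""
    loss = LOSSES[action]
    return p_real * loss["real"] + (1 - p_real) * loss["not_real"]


def best_action(p_real: float) -> str:
    """Choose the action with the smallest expected loss."""
    return min(LOSSES, key=lambda action: expected_loss(action, p_real))


for p_real in (0.02, 0.30, 0.90):
    print(f"belief {p_real:.2f}: best action = {best_action(p_real)}")
```

With these made-up losses, acting becomes the better choice once the belief that the effect is real exceeds 0.1; change the losses and the threshold moves, with no reference to 95 percent confidence.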

“And what if we aren’t dumb? What if we have evidence to support a cause-and-effect relationship? Let’s say you know how a particular chemical is harmful; for example, that it has been shown to interfere with cell function in laboratory mice. Then it might be reasonable to accept a lower statistical threshold when examining effects in people …”

A lower threshold for what: confidence, beliefs, or action? Oreskes talks about 95 percent confidence but she also seems to be calling for us to accept the reality of climate change and to do something about it. To statisticians, confidence, beliefs, and thresholds for actions are different things. Confidence is about the probability that chance mechanisms can produce data similar to, or even more extreme than, the data we’ve seen. Beliefs have to do with assessing which is really responsible for the warm decade: chance or climate change. Action thresholds depend on our beliefs but also on costs, risks and benefits. By not distinguishing between confidence, beliefs, and actions in her call for a lower threshold, Oreskes helps perpetuate the confusion surrounding these concepts.

Was the quest for accuracy in measurement and statistical precision an ascetic practice, a response to the clash between science and religion? Or is Oreskes over-simplifying history? A companion piece to this article takes up that question.

Oreskes points out that “In the case of climate change, we are not dumb at all. We know that carbon dioxide is a greenhouse gas, we know that its concentration in the atmosphere has increased by about 40 percent since the industrial revolution, and we know the mechanism by which it warms the planet.” Knowing these facts about carbon is evidence about possible non-random causes of the data. It may affect our beliefs and our actions, but it does not affect statistical confidence, which is based on probabilities calculated under the assumption that fluctuations are random. Oreskes is again failing to distinguish between confidence and beliefs.
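The distinction between confidence and belief can be sketched with Bayes' rule. The numbers are hypothetical: a 5 percent probability of a decade this warm under chance alone, an 80 percent probability if warming is real, and three different prior beliefs. The function name `posterior_real` is likewise an assumption of the sketch.

```python
def posterior_real(prior_real: float, pr_data_if_real: float, pr_data_if_chance: float) -> float:
    """Bayes' rule: belief that the effect is real after seeing the data."""
    numerator = prior_real * pr_data_if_real
    return numerator / (numerator + (1 - prior_real) * pr_data_if_chance)


# Hypothetical inputs. The chance-only probability is computed assuming random
# fluctuation, so it stays fixed no matter what else we know.
PR_DATA_IF_CHANCE = 0.05
PR_DATA_IF_REAL = 0.80

for prior in (0.10, 0.50, 0.90):
    belief = posterior_real(prior, PR_DATA_IF_REAL, PR_DATA_IF_CHANCE)
    print(f"prior {prior:.2f}: chance-only probability = {PR_DATA_IF_CHANCE:.2f}, "
          f"updated belief = {belief:.2f}")
```

Mechanistic knowledge, such as the physics of carbon dioxide, enters through the prior and moves the updated belief, while the chance-only probability on which statistical confidence rests is the same in every row.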

After urging scientists to adopt a threshold less stringent than 95 percent in the case of climate change, the piece continues:

“WHY don’t scientists pick the standard that is appropriate to the case at hand, instead of adhering to an absolutist one? The answer can be found in a surprising place: the history of science in relation to religion. The 95 percent confidence limit reflects a long tradition in the history of science that valorizes skepticism as an antidote to religious faith. Even as scientists consciously rejected religion as a basis of natural knowledge, they held on to certain cultural presumptions about what kind of person had access to reliable knowledge. One of these presumptions involved the value of ascetic practices. Nowadays scientists do not live monastic lives, but they do practice a form of self-denial, denying themselves the right to believe anything that has not passed very high intellectual hurdles.”

Yes, most scientists are skeptics. We do not accept claims lightly, we expect proof, and we try to understand our subject before we speak publicly and admonish others.

Thank goodness.

Please note that this is a forum for statisticians and mathematicians to critically evaluate the design and statistical methods used in studies. The subjects (products, procedures, treatments, etc.) of the studies being evaluated are neither endorsed nor rejected by Sense About Science USA. We encourage readers to use these articles as a starting point to discuss better study design and statistical analysis. While we strive for factual accuracy in these posts, they should not be considered journalistic works, but rather pieces of academic writing.

I have analyzed the temperature record for the last five decades.

The statistical results were compelling.

Will contact you by U.S. mail if you are interested in the information.

The excitement wrt the 2014 “record” was not substantial.

How significant are the last five decades, given that the Industrial Age started over two hundred years ago? Five decades would be less than a quarter of the data set, wouldn’t it?

Philip, I think your point is spot on. Almost all of the climate models exhibit degrees of variable bias that may lead to incorrect predictions. Also, small changes in the estimates of predictor variables (the estimated impacts of aerosol and radiative forcing is but one example) can have dramatic impacts on the lower limits of climate sensitivity equilibrium estimates. AR4 estimated the range at 2-4.5 C, while AR5’s estimate is 1.5-4.5 C.

I am not a skeptic; climate change is real. But the underlying variability in predictor variables for complex systems leads me to be cautious about model predictions and long term accuracy. Add in the inherent inaccuracy of the historical data due to the large discrepancy in older measurement systems and data management, and what are we missing that is critical?

Steve, the data are not limited solely to human records and measurements. There are additional records in the form of tree rings, ice samples, and other sources that can be not only compared with human records, but compared within themselves. These allow scientists to indicate a massive uptick in atmospheric changes shortly after the start of the Industrial Revolution.

Your concerns about the accuracy and availability of entirely human records are well-founded, but they are not the entire story.

It is interesting but disconcerting that the people who accept the premise that climate change is anthropogenic still focus on the fact that CO2, a minor component of greenhouse gas, is responsible for the increase in air temperature and the decrease in oceans’ pH. The main source of our energy comes from the burning of fossil fuels (80%). Fuels do give off CO2 as a by-product but their main function is to provide HEAT: (definition of fuel). The heat emitted by our energy use is more than four times the amount that can be accounted for by the measured rise in air temperature. Why is it that the “scientists” never mention this when asserting that CO2 causes global warming? Their claim is based mainly on correlations of CO2 and temperature and their belief that CO2 is/was the cause of rising temperatures. There are no correlations for which it can be shown that CO2, rather than another contributor, is the cause.

Even nuclear power which is being promoted as a “CO2-free” solution emits twice as much heat to the environment as it converts to electricity, and is being promoted here and in India where U.S. technology is to be used to construct nuclear plants in India.

What I have stated above is fact! My next comment will be opinion. The lowering of pH (“acidification”) of the oceans may be caused, not by CO2, but by acid rain created by the burning of 2000 million tonnes of coal a year. Coal contains ~1% of sulfur (equivalent to 60 million tonnes of sulfuric acid) and this is very likely to be the cause of the deterioration of the coral reefs and the loss of protective shells for some ocean creatures.

True, no one talks about the heat generated by burning fuel of any kind, and released to the environment via cooling tower, HX etc. Strange, that.

Interesting comment that it is a fact that heat emitted by our energy use is more than four times more than rise in temperature. How did you get to this fact?

BTW, CO2 is not “a minor component of greenhouse gas”, it IS a greenhouse gas, so is water vapor. Methane is even more potent as a greenhouse gas than CO2, for example, although it is very short-lived in the atmosphere, turning into CO2 after a relatively short time.

Your speculation that generation of power is the dominant source of atmospheric heating is an interesting one, as is your speculation that climate scientists have completely ignored it. Do you have any figures on how many BTUs of heat are generated by mankind? Do you have any figures on the relative contribution of that heat to the over-all warming trend?

I’ve always enjoyed the rhetorical tactic of diminishing an opponent through the use of quotation marks. If a scientist disagrees with you, or even merely fails to support you, simply demote them to a “scientist”. After all, they cannot be a “real” scientist if they disagree, right? While emotionally satisfying, it does not actually change the validity of a statement, neither by the “scientist”, nor the person denigrating them. As a source of information, it has very close to zero content, except to the extent that it reveals a non-quantifiable bias on the part of the speaker.

I agree that acid rain may be a significant contribution to the acidification of the oceans. I see no reason, however, to decide that CO2, which also forms an acid in water is not a significant factor as well. According to one article, there are approximately 400 parts per million of CO2 in our atmosphere at this time. That article goes on to claim that EACH part per million represents 2.13 gigatonnes of carbon, some 852 gigatonnes of carbon at current levels. Assuming, for the sake of simplicity, that 100% of that atmospheric carbon is in CO2 (some of it is doubtless in other compounds, such as methane), that means that there is 14,200 times as much CO2 available to form acid in the ocean as there is SO2, per your figure of 60 megatonnes.

Granted, you stated it was an opinion, not a fact. I merely point out that implying that atmospheric SO2 is the dominant (sole?) form of acidification while ignoring a much more common acid-forming gas may be a mistake. At any rate, it is NOT a simple either/or situation; clearly, SO2 can contribute to the problem. Equally clearly, it is not the sole contribution to the problem, nor is it likely to be even the dominant contribution, unless there is some additional chemistry factor of which I’m unaware.

So, my opinion is that the numbers indicate that you may be mistaken in assuming that SO2 has a strong enough effect on ocean acidification that CO2 can be safely minimized or ignored.

I appreciate the argument here, that those outside the field of statistics (including many scientists) misuse data and mis-state conclusions.

But at the same time, the point Oreskes is trying to make resonates with me and I regret that she did a poor job of expressing it in language acceptable to statisticians.

The claim that scientific pronouncements should be made from a “neutral” or “objective” perspective (i.e. not in advocacy of a particular action), though noble, is actually spurious and contributes to a moral vacuum.

People in general take action based on emotion. That’s not a bad thing. It’s the way we’ve evolved to behave. Since scientific findings are couched in as emotion-free a format as possible, it’s too easy to ignore facts (of climate change, in this case). Meanwhile, scientists, like everyone else, have value systems. And we in the general public (who assume, correctly, that people communicate based on their value system) might fail to take warranted action because the message is being delivered in too neutral a way.

Oreskes, perhaps naively, tries to turn her critique into a plan of reform of statistical analysis. Personally, I long for scientists to appear on TV and passionately communicate that it’s time for us to wake up and take drastic action before it’s too late.

I would urge you to read the paper by Lenny Smith linked to in the companion piece to this article (whether Oreskes was spinning the history of science too). Arguing passionately without understanding statistics may end up being hugely counterproductive. Why will scientists outside of climate science, for instance, who do understand statistics and uncertainty, take climate science seriously if it doesn’t communicate that uncertainty?

http://www.lse.ac.uk/CATS/Publications/Publications%20PDFs/Smith-Petersen-Variations-on-reliability-2014.pdf

If scientists in 1900 had done as you recommend then eugenics would have been a mainstream ed rather than limited to racist backwaters. Millions of people suffered and died from peptic ulcers because scientists knew they were caused by excess stomach acid when in fact the vast majority are caused by heliobacter pylori – an easily treated bacteria.

My reaction to claims that we “know” so much that we can do away with the skeptical conventions of science comes from Inigo Montoya in the Princess Bride: “you keep using that word (know). I don’t think it means what you think it does.”

Mr. Reeves,

There are typos in your comment which make it unclear, so I beg your forgiveness if I misunderstand your meaning… Eugenics was most decidedly not limited to racist backwaters. It was cutting edge science in its day, and had widespread support from coast to coast, and up to the highest court in the land. Justice Oliver Wendell Holmes wrote the majority opinion in Buck v. Bell (1927), which may be the single worst decision ever from SCOTUS (far worse than Dred Scott or Plessy).

From what I have read Eugenics was quite mainstream in the early 1900s.

“The claim that scientific pronouncements should be made from a “neutral” or “objective” perspective (i.e. not in advocacy of a particular action), though noble, is actually spurious and contributes to a moral vacuum.”

What is the alternative? Making pronouncements from a position of belief. This is by definition not science, it is dogma and should be treated as such.

“People in general take action based on emotion. That’s not a bad thing.”

History has shown us repeatedly that this is a very bad thing (unless of course you are the one manipulating emotions of others for your own gain).

I think those critiquing Oreskes are largely missing the point she is making.

Different fields use different p-levels depending on how great the impact of an error will be. For example, if we were manufacturing parts for a space shuttle, we would want the parts to pass safety testing again and again and again (let’s say that we are looking for a p-value for safety to be at .001, i.e. a 99.9% confidence level) whereas we might require a lower threshold for the parts of a toy airplane (i.e. a p-value of .10, or a 90% confidence level).

What Oreskes means about climate change models being too conservative in their projections is that many models we have created in the past to understand climate change have underestimated the change that was seen in subsequent years because they insisted on a p-value of .05 in the projection. This is because climate scientists use the most commonly-used (but not necessarily most valid) p-level standard of a 95% confidence level.

Oreskes’s point is that we should be willing to accept a lower level of confidence in our projections because anthropogenic climate change from GHG emissions is something that we are still rather unskilled in predicting. Other factors that we do not know about or do not often include are causing these underestimates. However, this does not mean that we should continue making low estimates. The cost of understating the urgency of action on climate change is disastrous, and models which don’t include possible outcomes at, say, a 90% confidence level, might fail to include the high magnitude of possible changes to the climate.

Oreskes’s point is not that climate science should not be rigorous. It’s that we should ease up on what we are willing to consider significant in the context of climate change, because ignoring analyses that have p-values between .05 and .10 is not helpful to fighting global warming.

We do not need exact projections. We need fundamental change in how we use our natural resources. Allowing more science that supports that change to be published when we are dealing with something as complex and crucial to life as global climate change is not degrading to the science as much as it is empowering to activists, land managers, and other key decision-makers who need to show why immediate and strong action on climate change must be taken.

I think the point of our critiques is that she did no credit to her argument by getting basic statistical concepts wrong, or defending her viewpoint with a kind of cartoon explanation for why science values precision.

I appreciate the argument here, that those outside the field of statistics (including many scientists) misuse data and mis-state conclusions.

But at the same time, the point Oreskes is trying to make resonates with me and I regret that she did a poor job of expressing it in language acceptable to statisticians.

The claim that scientific pronouncements should be made from a “neutral” or “objective” perspective (i.e. not in advocacy of a particular action), though noble, is actually spurious and contributes to a moral vacuum.

People in general take action based on emotion. That’s not a bad thing. It’s the way we’ve evolved to behave. Since scientific findings are couched in as emotion-free a format as possible, it’s too easy to ignore facts (of climate change, in this case). Meanwhile, scientists, like everyone else, have value systems. And we in the general public (who assume – correctly! – that people communicate based on their value system) might fail to take warranted action because the message is being delivered in too neutral a way.

Oreskes, perhaps naively, tries to turn her critique into a plan of reform of statistical analysis. Personally, I long for scientists to appear on TV and passionately communicate that it’s time for us to wake up and take drastic action before it’s too late.

I would urge you to read the paper by Lenny Smith linked to in the companion piece to this article (whether Oreskes was spinning the history of science too). Arguing passionately without understanding statistics may end up being hugely counterproductive. Why will scientists outside of climate science, for instance, who do understand statistics and uncertainty, take climate science seriously if it doesn’t communicate that uncertainty?

http://www.lse.ac.uk/CATS/Publications/Publications%20PDFs/Smith-Petersen-Variations-on-reliability-2014.pdf

Steve, the data are not limited solely to human records and measurements. There are additional records in the form of tree rings, ice samples, and other sources that can be not only compared with human records, but compared within themselves. These allow scientists to indicate a massive uptick in atmospheric changes shortly after the start of the Industrial Revolution.

Your concerns about the accuracy and availability of entirely human records are well-founded, but they are not the entire story.

Oreskes’ arguments in favor of relaxing the statistical standards in the case of AGW are merely statements of belief made as if they were statements of fact. We do NOT know that CO2 is a “greenhouse gas” – the statement begs the question. Even the “A” in AGW is a statement of belief.

Ironically, there are statements of FACT, based on observations, that the proponents of AGW refuse to acknowledge. For example, that the predictions of the majority of climate models have been contradicted by observations. Or the observation that the data of prominent promoters of AGW have been denied to other investigators by legal action. Or the observation that there is clear conflict of interest evidenced in producing the “right” answers as a requirement for being funded for further research.

Climate science does not pass the statistical test OR the smell test!

We do know that CO2 is a greenhouse gas. That has been proven in carefully controlled experiments. What we do not know for certain is that increased levels of CO2 are responsible for atmospheric warming. What we are even less certain about is how much warming will occur or whether that warming is good or bad. And most importantly whether the warming can be arrested in a cost effective manner.

Oreskes seems to want us to put all those uncertainties aside and just accept the alarmist narrative and policy recommendation. I for one think that is a silly approach to a very complex problem.

I’m a climate skeptic – yet I – and many, many other skeptics – recognize that CO2 works like a “greenhouse gas” – if not exactly like a greenhouse. The issue is whether the amplification of CO2’s effect can be as dire as alarmists claim.

errr – “climate skeptic” = “global warming skeptic”

How do you define a “greenhouse gas” that somehow implies that it is NOT known that CO2 belongs in that class? I wasn’t aware that any chemist, let alone any climate scientist has ever disputed that CO2 is a greenhouse gas, so I am very puzzled by your assertion. How do you define “greenhouse gas”? Does it notably differ from the definition that scientists are using?

Yes, the computer models still have difficulty making accurate predictions, although they are being refined all the time. The observations, however, are not in question. There is more CO2 in the atmosphere than there has been in quite a long time. That is established. And temperatures, as a global average, have been going up over the last couple of hundred years. That, too, is established. That the computer models may not mirror the observations is not an argument against AGW by itself. Indeed, I rather doubt that climate scientists came to the conclusion that AGW is real based on their computer models. I rather suspect that they came to that conclusion based on the observed data, and are now trying to create computer models that will allow them to make more accurate predictions than “it’s going to get hotter”.

We already have a good idea that it’s going to get hotter; we need accurate models to predict HOW MUCH hotter, and HOW FAST. We also need models that will tell us which areas will have droughts and which will get deluges due to increased evaporation, a better idea of how fast the ice is melting, and how that will affect the rest of us. Those are the areas that the models are failing in.

A better understanding of the actual processes involved will also enable us to understand whether AGW can be mitigated or reversed, or whether we have to simply hope. Accurate computer models would certainly help in that process, because they would help determine policies and processes.

I might also point out that over-reliance on computer models as an argument is problematic: Up until a few years ago, they could not create a computer model of a star going nova. No matter how much data they poured in, the simulated star wouldn’t go nova. No one was foolish enough to claim that novae weren’t real based solely on the fact the computer sims didn’t work.

Philip, I was once very excited by the idea that all the energy we generate might account for a, um, significant portion of the observed increase in avg global temperature. I did some simple calculations — maybe the right ones, maybe not — and concluded that the heat we generate is infinitesimally small compared with the solar radiation already bathing the planet. Quite disappointing. I’d be very interested to see a reference for your claim.

How sad that the author of this article feels that he must shield himself from the climate change zealots who will attack anyone who does not bow to their belief in global warming. Thus Mr. Lavine cringes and makes obeisance to global warming beliefs before he argues his point. I’m a scientist and I don’t care about consensus or peer pressure. Man-made global warming is a hoax. I’m unafraid to say so. So should Mr. Lavine.

“most scientists are skeptics. We do not accept claims lightly, we expect proof, and we try to understand our subject before we speak publicly and admonish others.”

Totally contrary to the history of the climate science crusade and modern science in general. Science has no quality control. No one ever checks anyone else’s work. Look at the ready acceptance of the hockey stick despite all the incompetent and amateurish errors.

Philip – First – Anthropogenic heat generation DOES affect LOCAL regions. It’s called “Urban Heat Island”(UHI) effect and disappears at some relatively short distance from the source (city). It is not a major contributor to temp increases. And, given an 18+ year pause in global temps with a purported 40% rise in CO2, neither is CO2.

Second – acidification. The most recent study claims catastrophic levels of CO2 entering the oceans – based on a cherry-picked data set. Only post-1988 data was considered for the study and over 100 years of previous data was ignored. IF that 100 years of data is factored into the results – SURPRISE – there is no increase in long term acidification. Acidification is just the latest in a long string of purported reasons (?) for EVERYONE to hand over control of the economy and our lives to the government. Remember the “coral scare”? You can find a few more in other places – including a new website detailing climate change prediction failures: http://climatechangepredictions.org/

Glad to see a clear discussion here. I will use the breast cancer gene computation to help make a point in an article I’m writing, thanks.

We had some related discussion of the Oreskes article on my blog: http://errorstatistics.com/2015/01/04/significance-levels-made-whipping-boy-on-climate-change-evidence-is-05-too-strict/

I realize that this post is focused on issues in the U.S.A., but it might be worth noting the situation in the U.K.

In autumn 2013, the IPCC published the first volume of its Fifth Assessment Report (AR5). That volume was devoted to the scientific basis of climate change. After the publication, I wrote a critique of the statistical analyses in the volume. The critique concluded that the statistical analyses are incompetent.

The critique was submitted to the U.K. Department of Energy and Climate Change, by Lord Donoughue. That led to a meeting at the Department: with the Department’s Under Secretary of State and the Department’s Chief Scientific Adviser, among others. The contents of the meeting were agreed to be confidential. What is a matter of public record, though, is that twelve days later, on 2014-01-21, the Under Secretary of State, speaking on behalf of the U.K. government, informed Parliament of the following.

This means that, for global warming, the U.K. government is no longer using or relying on statistical analysis of observational evidence; rather, the government relies solely on computer simulations of the climate system. That was a major change.

It is not possible to draw statistical inferences from climatic data, with our present understanding. Details are in my critique, which does not require training in statistics to understand:

http://www.informath.org/AR5stat.pdf

We know that only 3-4% of atmospheric CO2 is produced by manmade activities. So how can we assume that manmade activities are responsible for a significant percentage of global temperature fluctuation?

Answer: we can’t.

While I agree with the contents of the article, I have discussed the possibility that the nature of the scientific enterprise, with its emphasis on Type I error avoidance, versus that of the professional, with its emphasis on Type II error avoidance, might be another consideration in the discussion. This article emphasizes the type of error; I’m of the opinion that the nature of the environment/institution/mindset has to be considered in the mix.

I’ve presented the idea at the website below:

http://achemistinlangley.blogspot.com/2015/01/issues-in-communicating-climate-risks.html

Interesting and professional review of statistics. It is fascinating that the climate concerned are so often found needing to change the way science is done to make their case.

… What if we have evidence to support a cause-and-effect relationship? Let’s say you know how a particular chemical is harmful; for example, that it has been shown to interfere with cell function in laboratory mice. Then it might be reasonable to accept a lower statistical threshold when examining effects in people…

I’m not a professional statistician, but this quote rather shocked me. Oreskes seems to be saying that because a mechanism for a correlation between two variables can be asserted, statistical examination ought to be modified to make a finding of significance more likely.

But a hypothetical mechanism can ALWAYS be postulated for ANY correlated variables, and SOME evidence can be adduced. In the case of Climate Change, rather famously, the proposed radiation augmentation mechanism required a ‘tropospheric hot-spot’ – this was never found, and so the hypothesis ought to have been invalidated at that point. But the momentum was so great that this experimental disproof was simply ignored, and the hypothesis remains standing. The ‘Pause’ is also an effective disproof, and again it is ignored or argued away with bluster and smear tactics.

If this is to be an established pattern, then ANY socially-accepted causal hypothesis could demand that statisticians simply pass it through as proven. As it is I see little questioning from statisticians of ever-more-extreme statistical assertions in the newspapers. In particular, statisticians had a woeful track record in examining the use of PCA in Mann et al (1999). It now looks as if statisticians, having shown that they will not subject this science to robust enquiry, are now going to be required to support it regardless…

The biggest problem with climate is that it’s clearly a type of 1/f noise and unless I’m very much mistaken, there’s almost nothing written about appropriate statistical tests for this kind of case.

To put it simply: one cannot know what is abnormal, until we know what is normal. And because we don’t have enough data to know how the climate behaves normally over the century-long timescale, it is simply impossible to create any meaningful statistical test for “abnormality”.

For more thoughts see my website:

http://scottishsceptic.co.uk/2014/12/09/statistics-of-1f-noise-implications-for-climate-forecast/

http://scottishsceptic.co.uk/2014/12/09/natural-habitats-of-1f-noise-errors/

And for a bit of fun, see my 97% accurate climate forecast for the next century: http://uburns.com/

Well said! Climate scientists like Pierrehumbert admit they still struggle with the effects of ocean oscillations, and if the oceans are affecting observed “lulls in warming” then climate sensitivity to CO2 is much less than if lulls in warming are the presumed effects of aerosols. The PDO wasn’t named until 1997, yet their models were aliasing many of the PDO’s effects. In other words they still don’t know what normal is and all those statistics are useless!
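The general statistical worry in this thread, that a test built for independent noise can mislead when the noise is persistent, can be illustrated with a small simulation. What follows is only a sketch under stated assumptions: it uses AR(1) noise as a simpler stand-in for 1/f noise, the series length, persistence value, and function names are all hypothetical, and it says nothing about real temperature records. It generates series with no trend at all and counts how often a naive least-squares trend test declares one at the 5 percent level.

```python
import math
import random

# Approximate two-sided 5% critical value of Student's t with 98 degrees
# of freedom; it matches the default series length n=100 used below.
T_CRIT = 1.984


def trend_t_stat(y):
    """t statistic for the slope of an ordinary least-squares line through y."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    rss = sum((v - intercept - slope * i) ** 2 for i, v in enumerate(y))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope / std_err


def ar1_series(n, phi, rng):
    """Trend-free AR(1) noise: each value is phi times the last plus a fresh shock."""
    series, prev = [], 0.0
    for _ in range(n):
        prev = phi * prev + rng.gauss(0.0, 1.0)
        series.append(prev)
    return series


def false_alarm_rate(phi, n=100, trials=500, seed=0):
    """Fraction of trend-free series in which the naive test reports a trend."""
    rng = random.Random(seed)
    hits = sum(
        abs(trend_t_stat(ar1_series(n, phi, rng))) > T_CRIT
        for _ in range(trials)
    )
    return hits / trials


print("independent noise (phi=0.0):", false_alarm_rate(0.0))
print("persistent noise  (phi=0.9):", false_alarm_rate(0.9))
```

With independent noise the false-alarm rate should come out near the nominal 5 percent, and with persistent noise well above it. That shows only that the naive test is the wrong tool for persistent noise, not that no suitable test exists.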

Prof. Oreskes cannot be excused for not understanding what standard deviation is. Even amateurs, like I am, who don’t understand the finer points about statistics, understand that p value thresholds are not mere ‘convention’.

She even kills her own reasoning with the chemical analogy. If we know the chemical is dangerous, that means that we ALREADY have a p value less than .05 that it is.

Prof. Oreskes probably doesn’t understand the difference between counting and measuring, either.

We’ve seen so many statistical and logical pitfalls in the global warming debate. Consider:

Was the p-value calculated based on a one-sided test? If so, that’s already a statistical mistake. CO2 has a fertilizing effect on earth’s biomass, which is a mechanism for cooling the earth. Thus, a harder-to-satisfy two-sided test should have been performed.

A 0.05 significance level is a mere measure of correlation. That correlation doesn’t tell us if A causes B, if B causes A, or A and B share a cause, C.

“A 0.05 significance level is a mere measure of correlation.”

I used sloppy language, but my point remains: Correlation may have multiple explanations.
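
On the one-sided versus two-sided question raised in this thread, a short calculation shows why a two-sided test is harder to satisfy. This is a sketch only: it assumes a test statistic that is standard normal under the null hypothesis, and the value z = 1.8 is hypothetical, chosen for illustration rather than taken from any climate analysis.

```python
import math


def p_one_sided(z):
    """P(Z >= z) for a standard normal Z (upper-tail test)."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def p_two_sided(z):
    """P(|Z| >= |z|) for a standard normal Z."""
    return math.erfc(abs(z) / math.sqrt(2.0))


z = 1.8  # hypothetical test statistic, chosen only for illustration
print(f"one-sided p = {p_one_sided(z):.4f}")
print(f"two-sided p = {p_two_sided(z):.4f}")
```

For a positive statistic the two-sided p-value is exactly twice the one-sided one, so the same z = 1.8 falls below 0.05 in the one-sided test but not in the two-sided test.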

Nowadays scientists do not live monastic lives, but they do practice a form of self-denial, denying themselves the right to believe anything that has not passed very high intellectual hurdles.

Gosh, what can one say? She is advocating religious fervor while chastising religion. Her essay is chaos trying to pose as reason.

“SCIENTISTS have often been accused of exaggerating the threat of climate change, but it’s becoming increasingly clear that they ought to be more emphatic about the risk.”

What, calling for ‘deniers’ to be put in prison, on trial, fired, have degrees revoked, banned from publishing in certain journals or out-and-out killed is not emphatic enough?

What the ..heck.. does this woman want to do in the name of science? Lobotomies followed by slavery?

“What, calling for ‘deniers’ to be put in prison, on trial, fired, have degrees revoked, banned from publishing in certain journals or out-and-out killed is not emphatic enough?”

Would you please provide evidence to support your claim? What scientist has demanded the imprisonment of deniers? Let’s not fall for media propaganda.

Senator Whitehouse from Rhode Island has advocated prosecuting Deniers with the RICO statutes.

In my own analysis of the UAH lower troposphere data, I don’t see anything remotely approaching statistical significance in the warming.

I’ve posted an article about it on my (new) blog called GreenHeretic.com. I’m mostly going to be posting about energy, not environment, but I started with asking and answering the question, has the warming we have seen been statistically significant?

http://greenheretic.com/has-warming-been-statistically-significant/

The key is understanding model diagnostics. The trendline appears to be clear. However, when you test for serial correlation of errors (D-W), the trend model fails miserably. When you apply a simple ARIMA(1,1,0), you get a clear answer to the question. There’s nothing there.
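
The Durbin-Watson (D-W) check mentioned above can be sketched in a few lines. This is a minimal illustration on synthetic data, not the UAH record: the trend of 0.01 per step, the AR(1) coefficient of 0.9, and the series length are all assumed values, and the code does not fit the ARIMA(1,1,0) model the comment refers to. It fits a straight line to two series with the same trend, one with independent errors and one with serially correlated errors, and computes the D-W statistic on each set of residuals.

```python
import random


def ols_residuals(y):
    """Residuals from an ordinary least-squares line fitted against time."""
    n = len(y)
    t_mean = (n - 1) / 2.0
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y)) / sxx
    intercept = y_mean - slope * t_mean
    return [v - (intercept + slope * t) for t, v in enumerate(y)]


def durbin_watson(resid):
    """DW statistic: near 2 for uncorrelated residuals, near 0 for strong positive autocorrelation."""
    num = sum((resid[i] - resid[i - 1]) ** 2 for i in range(1, len(resid)))
    return num / sum(e * e for e in resid)


rng = random.Random(1)
n = 400

# Same assumed trend in both series; only the error structure differs.
white = [0.01 * t + rng.gauss(0.0, 1.0) for t in range(n)]

persistent, x = [], 0.0
for t in range(n):
    x = 0.9 * x + rng.gauss(0.0, 1.0)  # AR(1) errors with assumed coefficient 0.9
    persistent.append(0.01 * t + x)

dw_white = durbin_watson(ols_residuals(white))
dw_persistent = durbin_watson(ols_residuals(persistent))
print(f"DW, white errors: {dw_white:.2f}; DW, AR(1) errors: {dw_persistent:.2f}")
```

A D-W value well below 2 signals positively autocorrelated residuals, in which case the usual standard error of the trend is too small and the reported significance is overstated. It flags a problem with the simple trend model; whether a trend survives under a better error model is a separate question.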

Let’s say one was tasked to publish a research paper about the sleeping habits (e.g. how long, how often she woke up, when REM occurred, etc.) of a lady named Martha who is exactly 100 years, 2 minutes and 10 seconds old. Unfortunately, only the last 2 minutes 10 seconds of data documenting her sleeping habits exist.

Answer the following:

Is a 130-second period of data, out of a 100-year (3.1+ billion seconds) period, a valid or invalid sample size?

Using the same 130-second snippet, would it be logical for one to dogmatically claim that Martha’s sleep pattern is x, it may or may not have been y, and we therefore must do z or Martha dies?
