What Can Statistics Tell Us About Deflategate?

Both the New England Patriots and quarterback Tom Brady were recently punished by the National Football League on strong suspicion of deflating footballs in a game against the Indianapolis Colts. Driving the fines and suspensions was a 243-page document, the Wells Report, which includes a section that uses advanced statistical techniques to compare pounds-per-square-inch (PSI) values from each team’s footballs.

How was statistics used in the Wells Report, and what are sources of uncertainty in the results?

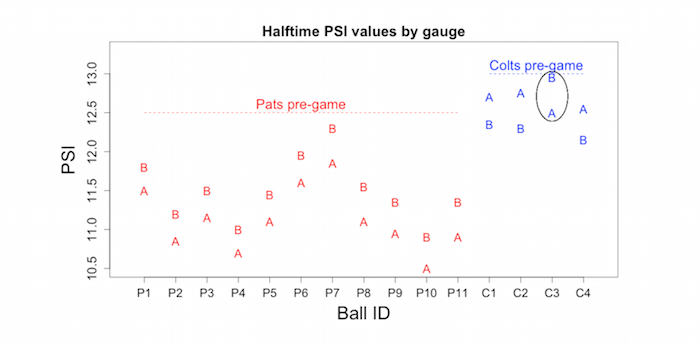

NFL regulations require footballs to be inflated to between 12.5 and 13.5 PSI, but by halftime several of New England’s balls had dropped below the 12.5 cutoff. The data in the Wells Report, analyzed by the consulting company Exponent, compare each team’s pre-game PSI level (12.5 for New England, 13.0 for Indianapolis) with its halftime measurements. Here’s a figure showing the halftime PSIs of each ball that was measured, separated by gauge label (A or B). The horizontal red and blue lines indicate the pre-game PSI levels.

The graph shows that, relative to each team’s pre-game level, the PSI of New England’s footballs dropped by more than that of Indianapolis’s: the vertical distance from the pre-game line to the halftime measurements is much greater for the Pats than for the Colts. On page 171, the report uses what’s known as a linear mixed effects model to conclude that, if chance variation alone were at play, there would be only about a 0.42 percent probability (1 in 240) of observing a difference in PSI drops at least this large.

Such statistical claims against the Patriots are damning; the cutoff for significance is generally 5 percent (1 in 20) or 1 percent (1 in 100), and this probability falls well below both thresholds. In other words, the generally accepted norm is that if a result this extreme would be so unlikely under chance alone (less likely than the 5 percent or 1 percent threshold), then we reject the notion that we’re only observing chance fluctuations. Because this probability (0.42 percent) is so low, the results add context to other, non-statistical evidence suggesting that the Patriots more than likely altered the PSI levels.

So the Pats are dead in the water, right?

One thing left out of the report is a discussion of the assumptions governing both the data and the statistical model, and varying these assumptions gives a sense of how different the results might look under different scenarios.

The first assumption made in the Wells Report concerns the gauge assignment of each measurement. As outlined on SI.com and apparent in the figure above, it appears unlikely that every gauge assignment is accurate, as football C3 does not match the pattern of gauge differences seen on the other Colts balls. The report admits as much on page A-8 and considers the possibility that the measurements taken on this third football were invalid. One way this could have happened is if the readings from the two gauges had been switched. Another possibility is that the PSI values for this football were recorded incorrectly. If the actual values were a little lower than recorded, the Colts’ drop would have been larger, and the difference between the drop in PSI of the Colts’ balls and that of the Patriots’ balls might not have been large enough to produce a suspiciously low probability.

It was a sordid story of murder and intrigue in which a young nurse, Kristen Gilbert, was accused of killing her patients at the Veterans Administration medical center in Northampton, Massachusetts. The evidence was, at first, circumstantial: suspicious coworkers, missing vials of adrenaline, and more deaths during her shift than seemed normal.

The trends were not obvious day to day, but over the course of several years a pattern emerged. When Gilbert worked the night shift, the number of deaths during the night shift increased; when she worked the evening shift, the number of deaths during the evening shift was higher than usual. Over the period in question, there were approximately nine deaths for every 200 eight-hour shifts when Gilbert was not working. Over the 257 shifts she worked, there were 40 deaths. What was the chance that the hospital would see this many deaths (or more) when Gilbert was on duty if the deaths were occurring randomly?
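One rough way to approach that question is sketched below. It assumes, as a simplification of our own, that deaths occur independently at a constant rate, so the count over Gilbert’s shifts follows a Poisson distribution whose mean is the non-Gilbert rate (9 per 200 shifts) times her 257 shifts. This is not necessarily the calculation used in the case; it only shows how a tail probability of this kind is computed.

```python
import math


def poisson_upper_tail(k_min: int, lam: float, k_max: int = 500) -> float:
    """P(X >= k_min) for X ~ Poisson(lam), summed directly from k_min upward.

    Each term is computed in log space, and summing the upper tail directly
    avoids the cancellation error of 1 - P(X < k_min) when the tail is tiny.
    Terms beyond k_max are negligible for the rates used here.
    """
    total = 0.0
    for k in range(k_min, k_max + 1):
        log_pmf = -lam + k * math.log(lam) - math.lgamma(k + 1)
        total += math.exp(log_pmf)
    return total


# Figures from the text; the Poisson model itself is our assumption.
rate_per_shift = 9 / 200   # deaths per shift when Gilbert was not working
shifts_worked = 257
observed_deaths = 40

expected = rate_per_shift * shifts_worked  # roughly 11.6 deaths expected by chance
p_tail = poisson_upper_tail(observed_deaths, expected)

print(f"expected deaths: {expected:.2f}")
print(f"P(at least {observed_deaths} deaths by chance): {p_tail:.2e}")
```

Under this model the tail probability comes out far below one in a million, consistent in order of magnitude with the figure reported in the case.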

Professor Stephen Gehlbach of the University of Massachusetts, one of the statistical experts assigned to the case, noted that the chance came out to less than one in a million. It was next to impossible that the deaths were simply random fluctuations in the data. Based on this report and other evidence, Gilbert was indicted to stand trial in the deaths of three patients (and the attempted murder of four others). But the analysis was not used as evidence at trial, because the statistical conclusions only determined how likely it was that the deaths could occur randomly; they could not point to what caused such unlikely events.

Plus, the ease with which a juror might leap from “the chance of this occurring by chance is 1 in a million” to “the chance that Gilbert is innocent is 1 in a million” also made the statistical conclusions prejudicial; jurors might not have been able to weigh other possible causes of the deaths, or to judge whether the rest of the evidence against Gilbert was convincing.
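The juror’s leap confuses two different conditional probabilities. In generic notation of our own (not from the case), let E be the observed excess of deaths, H0 the hypothesis that the deaths occurred at random, and H1 the hypothesis that something other than chance was at work. The one-in-a-million figure estimates P(E | H0), while Bayes’ rule shows that the probability of chance given the evidence also depends on the prior probabilities and on how likely the evidence is under H1:

```latex
P(H_0 \mid E) = \frac{P(E \mid H_0)\,P(H_0)}{P(E \mid H_0)\,P(H_0) + P(E \mid H_1)\,P(H_1)}
```

A tiny P(E | H0) can make chance an implausible explanation, but even then the calculation only favors H1 as a whole, which includes any non-random cause of the deaths, not Gilbert specifically.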

Statistics can only say so much: under careful and controlled assumptions, we can speak to the probability of an event occurring. We cannot use statistics so easily to convict people (or, for that matter, football teams). Kristen Gilbert was convicted using non-statistical evidence, and is serving out four life sentences for her crimes.

Rebecca Goldin

A second assumption concerns each team’s pre-game PSI values. Because the differences between the two gauges’ readings range from 0.20 to 0.45 PSI, it’s safe to assume that there is measurement error in any single PSI recording. However, the report fixes the pre-game PSI of every ball at a single value for each team. For the Colts, the report uses a level of 13.0, arguing on page 3 that ‘most of the (12) Colts game balls tested by Anderson prior to the game measured 13.0 or 13.1 psi.’ Yet if the balls actually measured 13.1 rather than 13.0, the vertical distance between the Colts’ pre-game level and their halftime measurements would be larger. A larger drop in the Colts’ balls would make the drop in PSI of the Patriots’ balls less surprising.

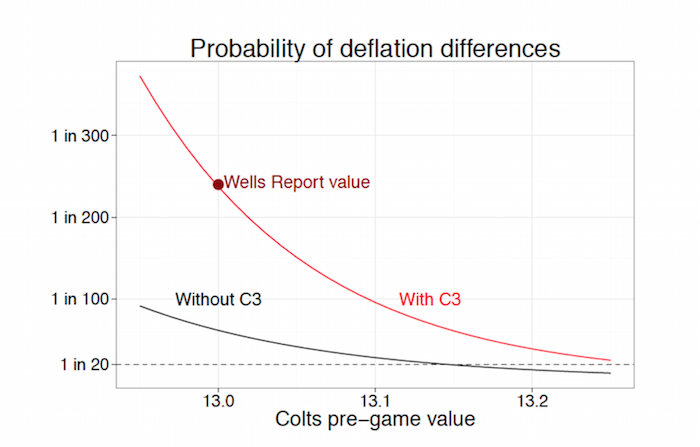

In fact, we can quantify the probability of seeing differences this large (between the Patriots’ drop and the Colts’ drop in PSI) as a function of the Colts’ assumed pre-game PSI level. Here’s a plot showing how much the report’s conclusions can vary under alternative pre-game readings for Indianapolis. The red line shows results including all four Colts footballs, while the black line shows results after dropping football C3.

Varying the pre-game values by just a few tenths of a PSI produces drastic differences in the resulting probabilities. Pre-game values a few tenths of a PSI above 13.0 make the results observed at halftime substantially more plausible; a few tenths below 13.0, and the probability falls to nearly impossible levels. The 0.42 percent conclusion also depends heavily on the inclusion of football C3.
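To see why a few tenths of a PSI matter so much, here is a minimal sketch of the same kind of sensitivity sweep. It swaps in a simpler Welch-style two-sample statistic with a normal-approximation p-value in place of the report’s linear mixed effects model, uses one reading per ball (ignoring the gauge structure), and runs on hypothetical halftime readings invented for illustration, not the Wells Report data. The function name `drop_gap_test` is likewise hypothetical.

```python
import math
from statistics import mean, variance


def drop_gap_test(pats_halftime, colts_halftime, pats_start, colts_start):
    """Compare the two teams' mean PSI drops with a Welch-style statistic.

    Each ball's drop is its team's assumed pre-game PSI minus its halftime
    reading. Returns (gap in mean drops, z statistic, two-sided p-value).
    The p-value uses a normal approximation; with samples this small a
    t reference distribution would be more appropriate.
    """
    pats_drops = [pats_start - x for x in pats_halftime]
    colts_drops = [colts_start - x for x in colts_halftime]
    gap = mean(pats_drops) - mean(colts_drops)
    se = math.sqrt(variance(pats_drops) / len(pats_drops)
                   + variance(colts_drops) / len(colts_drops))
    z = gap / se
    p = math.erfc(abs(z) / math.sqrt(2))  # P(|Z| >= |z|) for a standard normal Z
    return gap, z, p


# HYPOTHETICAL halftime readings for illustration only (not the Wells Report data).
pats_halftime = [11.3, 10.9, 11.1, 10.8, 11.2, 11.6, 11.9, 11.2, 11.0, 10.6, 11.0]
colts_halftime = [12.2, 12.6, 13.1, 12.4]

# Sweep the Colts' assumed pre-game PSI; the Patriots' stays fixed at 12.5.
for colts_start in [12.8, 12.9, 13.0, 13.1, 13.2, 13.3]:
    gap, z, p = drop_gap_test(pats_halftime, colts_halftime, 12.5, colts_start)
    print(f"Colts start {colts_start:.1f}: gap {gap:.2f} PSI, z = {z:.2f}, p = {p:.1e}")
```

Raising the assumed Colts baseline enlarges the Colts’ drop, shrinks the gap between teams and pushes the p-value up; with these made-up numbers, half a PSI moves it by orders of magnitude.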

Despite uncertainty in the referees’ pre-game measurements, as well as measurement error and inconsistencies in gauge readings, the Wells Report does not show results under different pre-game assumptions. While statistics helped to quantify the probability of the observed PSI drops, it can be argued that statistics should also have been used to clarify exactly how much uncertainty remains with respect to the data collection and model assumptions.

The figure above only considers variations in the Colts’ pre-game values, not the Patriots’. Additional issues include the measurement error in each reading and the rounding of PSI values to the nearest 0.05. Accounting for each of these factors would tend to increase the uncertainty in the conclusions, which could make it more plausible for the Patriots’ halftime measurements to have dropped by as much as they did.

Further, there’s the question of whether the statistical model used was appropriate in the first place; for the technically inclined, one check is a plot of the model’s residuals, which should show evenly distributed scatter. As it turns out, the linear model used in the Wells Report may not have been appropriate. In such scenarios, alternative statistical procedures, including nonparametric approaches, are recommended.
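As one example of the nonparametric approaches mentioned above, here is a sketch of an exact permutation test on the PSI drops. It assumes one drop per ball and that team labels are exchangeable under the null hypothesis; it drops the normality assumption of a linear model but still depends on the assumed pre-game values used to compute the drops, and it ignores the gauge structure. The drops below are hypothetical numbers for illustration, not the Wells Report data, and `exact_permutation_p` is a hypothetical helper name.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p(pats_drops, colts_drops):
    """One-sided exact permutation p-value for mean(Pats drop) > mean(Colts drop).

    Under the null hypothesis every choice of which len(colts_drops) balls to
    label "Colts" is equally likely. The p-value is the fraction of those
    relabelings whose gap in mean drops is at least as large as the observed one.
    """
    pooled = list(pats_drops) + list(colts_drops)
    n = len(pooled)
    observed = mean(pats_drops) - mean(colts_drops)
    at_least_as_extreme = 0
    total = 0
    for colts_idx in combinations(range(n), len(colts_drops)):
        chosen = set(colts_idx)
        colts = [pooled[i] for i in colts_idx]
        pats = [pooled[i] for i in range(n) if i not in chosen]
        total += 1
        # Small tolerance so floating-point ties with the observed gap still count.
        if mean(pats) - mean(colts) >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / total


# HYPOTHETICAL PSI drops for illustration only (not the Wells Report data).
pats_drops = [1.25, 1.65, 1.35, 1.8, 1.4, 0.9, 0.65, 1.4, 1.55, 2.0, 1.6]
colts_drops = [0.3, 0.25, 0.5, 0.45]

p = exact_permutation_p(pats_drops, colts_drops)
print(f"exact one-sided p = {p:.5f}")
```

With 11 and 4 balls there are only C(15, 4) = 1365 relabelings, so the smallest attainable p-value is 1/1365; a sample this small limits how much any test can say.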

Assumptions aside, it is important to recognize that the 0.42 percent is not New England’s probability of innocence. Statistics cannot assign guilt (see sidebar).

Nor can a causal connection between football PSI levels and purposeful deflation be claimed from these data, since they were not collected under a controlled study design. Indeed, in their response to the Wells Report, the Patriots claimed that both additional stress on their footballs and differences in football upkeep could have caused the difference in PSI drops observed at halftime.

From a statistical standpoint, perhaps the lesson here lies not in deflated football probabilities, but in sample size and assumptions. In part because there’s a small sample of footballs, random error and variations in the assumptions can easily change the Wells Report’s 1 in 240 to either a 1 in 24 or a 1 in 2400. The assumptions you make drive your conclusions.

For such a high-profile case, it is perhaps disheartening to admit that statistics cannot give us an exact answer, and that our answers can be tweaked by various inputs and prior beliefs. But interpreting and understanding this uncertainty and ambiguity is part of what defines the science of learning from data. And so the value of statistics here is not that it gives us one answer, but that it tells us that one answer is not enough.

Michael Lopez is an Assistant Professor of Statistics at Skidmore College. His primary research area is causal inference, but he is also interested in statistical applications to sports and writes actively on his blog, StatsbyLopez.com.

Please note that this is a forum for statisticians and mathematicians to critically evaluate the design and statistical methods used in studies. The subjects (products, procedures, treatments, etc.) of the studies being evaluated are neither endorsed nor rejected by Sense About Science USA. We encourage readers to use these articles as a starting point to discuss better study design and statistical analysis. While we strive for factual accuracy in these posts, they should not be considered journalistic works, but rather pieces of academic writing.

I have completed a working paper containing a statistical analysis exploring the likelihood of the data in the Wells Report being manipulated, with fairly significant results. I’ve sent it to statistics departments of major universities for their review. If you are interested in me sending you a .pdf copy (12 pages), feel free to send me an e-mail address that I can send it to.

Can you please forward me a copy of your report?

I’d love a copy of your analysis Robert.

Oh, puhleaze! Just read an article by yourself defending the Wells Report statistics suggesting that the Pats’ balls were underinflated relative to the Colts’ balls. This current article represents significant backtracking on your part. Let’s use our thinking caps for a second regarding Wells’ deceitful and bogus use of statistics and your use of bogus data regarding “deflategate”:

1) Single measurements of physical parameters taken using uncalibrated instruments are not accepted as valid by any scientific body on this planet (see also intercepted ball pressures for typical spreads due to multiple measurements).

2) Just what is the standard deviation of a single point?

3) The starting pressures were never recorded (see PV=nRT). The times and temperatures were not recorded. It was not established which gauge was used with gauge measurements consistently varying by + .3-.45 psi for the same ball depending upon which gauge was used. There are no believable pressures, period. Can’t do statistics regarding ideal gas law predictions, or any statistics e.g. variance analysis using imaginary numbers, sorry.

4) Again, the gauges used for stated pressures were not calibrated nor measured to be accurate over the relevant temperature ranges.

5) Wells switched two Colts’ balls psi values that were taken with two different gauges simply because he felt that the data was misrecorded. This type of action is an instant F in any science class and proclaims outright FRAUD. The uh I felt that the measurement belonged to the other gauge is deceitful (also for alternative explanation see error bars, results of three measurements of the intercepted ball i.e. 11.45, 11.35 and 11.75 = mean of 11.52 psi, standard error = +/- .12 psi, assuming the intercepted ball was not tampered with). There are also statistical tests which can be used to assign probability of outliers, which is why multiple measurements are made of physical parameters.

6) The Wells report does not accurately address issues affecting pressure such as temperature, time and effects of ball handling, evaporative cooling due to wet balls, preparation of footballs, etc.

The data in the Wells report is crap, the analysis is crap and the conclusions are crap. Not only that, but the whole treatment of data in the Wells report would probably form a basis to make an accusation of malice in any defamation court case, along with Wells’ outright lie that he had direct evidence of Brady’s guilt.

Cheers. Recommend: Take Chemistry Quantitative Analysis and physical chemistry courses so you don’t sound like a complete nitwit. The statistical analysis of imaginary numbers is just that: imaginary.

Please retract your defective statistical analysis of bogus data regarding deflategate ball pressures. Your erroneous analysis is incorrect on so many levels it is embarrassing.

Read the AEI report for correct statistical analysis.

https://www.aei.org/publication/on-wells-report/

It is unlikely that the balls were measured in the order listed in the Exponent appendix of the Wells report. Figure 22 of that report shows that the pressure of the balls increases quickly when they are brought into a warmer environment like the refs’ locker room. Indeed, they recover approximately 75% of their pre-game pressure in eight minutes. Yet Exponent’s Table 2 shows the lowest pressure as being second to last; it was most likely the coldest. And the first measurement was the third-highest pressure; it was probably close to being the third warmest. The Exponent report’s ordering is almost impossible given the rapid change in pressure during this time period. If one plots all the ball pressures (Pats and Colts) in the order of the pressures measured, you get a completely different picture. In such a plot the pressures of the Colts’ balls are just a continuation of the pressures of the Pats’ balls. This shows there is nothing special about the balls of either team.

This also solves the dilemma of why there is so much variation in the Pats’ measurements of balls that were assumed to all start very close to 12.5 psi. It is mostly due to the warming-up process.